Gaussian distributions in models

Classification model

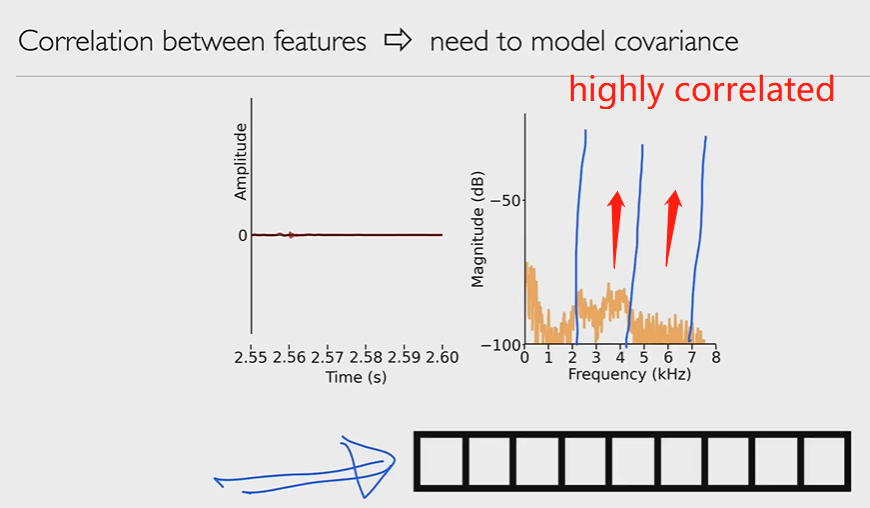

If features are highly correlated with each other, we can remove this correlation by rotating the axes, for example with PCA.
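Here is a minimal NumPy sketch of the idea. PCA rotates the axes onto the eigenvectors of the feature covariance matrix, and in the rotated coordinates the features are uncorrelated. The two-feature data set is synthetic and purely illustrative.

```python
import numpy as np

def pca_rotate(X):
    """Rotate data X (n_samples x n_features) onto the eigenvectors of its covariance."""
    Xc = X - X.mean(axis=0)                  # centre each feature
    cov = np.cov(Xc, rowvar=False)           # feature covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)   # symmetric matrix: real eigenpairs, ascending
    order = np.argsort(eigvals)[::-1]        # largest-variance axis first
    return Xc @ eigvecs[:, order]            # coordinates along the rotated axes

rng = np.random.default_rng(0)
x1 = rng.normal(size=1000)
x2 = 0.9 * x1 + 0.1 * rng.normal(size=1000)  # x2 strongly correlated with x1 (synthetic)
X = np.column_stack([x1, x2])

Z = pca_rotate(X)
print(np.corrcoef(X, rowvar=False)[0, 1])    # close to 1: original axes are correlated
print(np.corrcoef(Z, rowvar=False)[0, 1])    # about 0: rotated axes are uncorrelated
```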

Gaussian distributions of the feature vectors for each class

Decision boundary

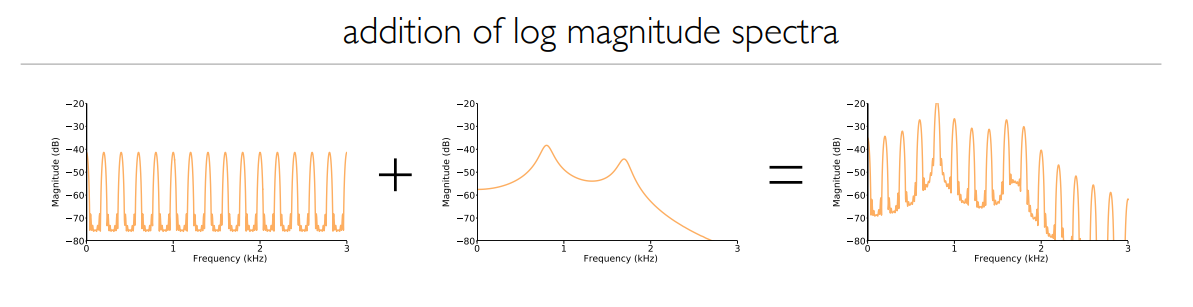

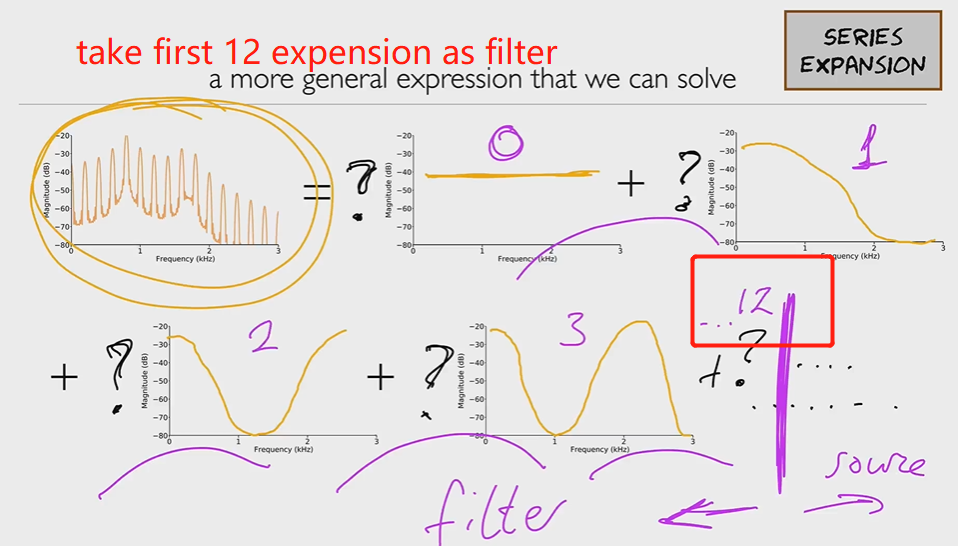

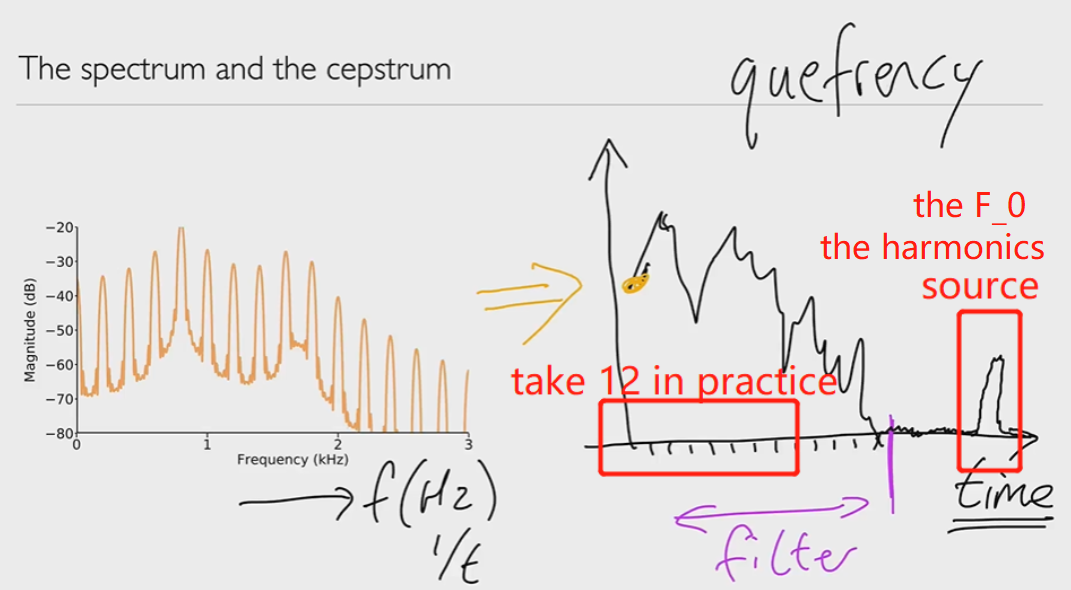
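As a sketch of how class-conditional Gaussians give a decision boundary, assume two hypothetical classes, each modelled by a 1-D Gaussian, with equal priors. A point is assigned to the class with the higher likelihood, and the boundary lies where the two likelihoods are equal. The class names, means and variances below are made up for illustration.

```python
import numpy as np

def gaussian_log_pdf(x, mean, var):
    """Log density of a 1-D Gaussian N(mean, var) evaluated at x."""
    return -0.5 * (np.log(2 * np.pi * var) + (x - mean) ** 2 / var)

# Hypothetical class models: (mean, variance); equal priors assumed
classes = {"A": (0.0, 1.0), "B": (3.0, 1.0)}

def classify(x):
    """Pick the class whose Gaussian gives x the highest log-likelihood."""
    return max(classes, key=lambda c: gaussian_log_pdf(x, *classes[c]))

# Scan a grid and find where the predicted class switches
xs = np.linspace(-2, 5, 7001)
labels = [classify(x) for x in xs]
boundary = next(x for x, prev, cur in zip(xs[1:], labels, labels[1:]) if prev != cur)
print(round(boundary, 2))  # 1.5, midway between the two means
```

With equal variances the boundary sits midway between the means. With unequal variances the log-likelihoods differ by a quadratic in x, so the boundary moves away from the midpoint.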

Cepstral Analysis, Mel-Filterbanks

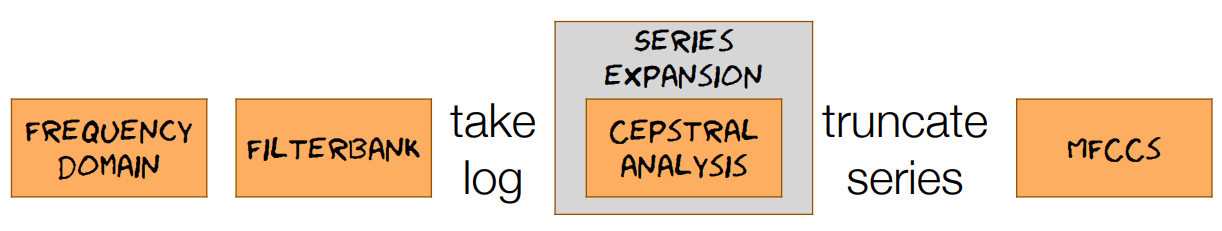

We now start thinking about what a good representation of the acoustic signal should be, which motivates the use of Mel-Frequency Cepstral Coefficients (MFCCs).

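Since the features in a feature vector (for example, the energies of neighbouring filterbank channels) are correlated, we have to solve this correlation problem if we want to model them with Gaussians: a Gaussian with a diagonal covariance matrix assumes the dimensions are uncorrelated, while a full covariance matrix needs many more parameters.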
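To make the correlation problem concrete, here is a minimal sketch (synthetic data with an assumed correlation of 0.8) that fits a full-covariance Gaussian and a diagonal-covariance Gaussian to the same two correlated features and compares their average log-likelihoods.

```python
import numpy as np

def gaussian_loglik(X, mean, cov):
    """Average log-likelihood of the rows of X under a multivariate Gaussian N(mean, cov)."""
    d = X.shape[1]
    diff = X - mean
    _, logdet = np.linalg.slogdet(cov)
    # Squared Mahalanobis distance of each row
    mahal = np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)
    return np.mean(-0.5 * (d * np.log(2 * np.pi) + logdet + mahal))

rng = np.random.default_rng(1)
true_cov = np.array([[1.0, 0.8], [0.8, 1.0]])   # two strongly correlated features
X = rng.multivariate_normal([0.0, 0.0], true_cov, size=2000)

mean = X.mean(axis=0)
full_cov = np.cov(X, rowvar=False, bias=True)   # maximum-likelihood full covariance
diag_cov = np.diag(np.diag(full_cov))           # same variances, correlations dropped

ll_full = gaussian_loglik(X, mean, full_cov)
ll_diag = gaussian_loglik(X, mean, diag_cov)
print(ll_full > ll_diag)  # True: the diagonal model cannot capture the correlation
```

The full model fits better because it can represent the correlation, but for d-dimensional features it needs d(d+1)/2 covariance parameters instead of d. Decorrelated features, which a diagonal covariance describes well, avoid that cost.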

In TTS

In ASR, we can decompose the input further.

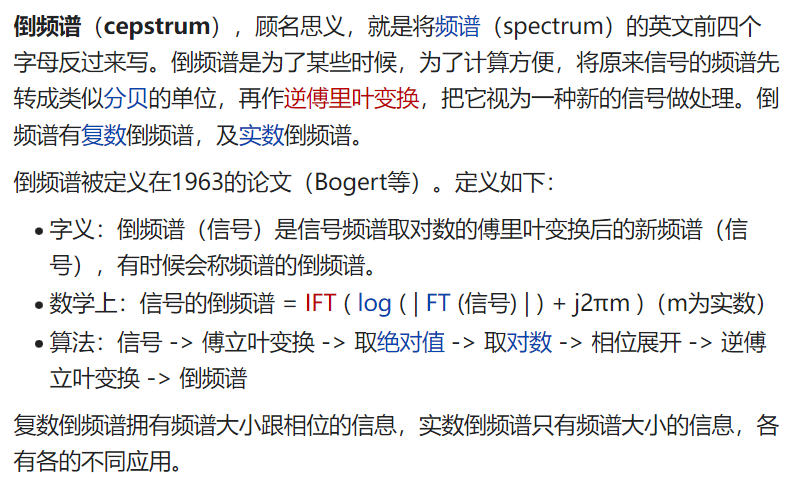

The independent variable of a cepstral graph is called the quefrency.

The quefrency is a measure of time, though not in the sense of a signal in the time domain.
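A rough sketch of computing a real cepstrum (the inverse FFT of the log magnitude spectrum) for one synthetic voiced-like frame. The sample rate, frame length and fundamental frequency are assumptions chosen for illustration. The quefrency axis is measured in seconds, and the pitch period should show up as a peak near 1/f0.

```python
import numpy as np

def real_cepstrum(frame):
    """Real cepstrum: inverse FFT of the log magnitude spectrum."""
    spectrum = np.fft.rfft(frame)
    log_mag = np.log(np.abs(spectrum) + 1e-10)  # small floor avoids log(0)
    return np.fft.irfft(log_mag, n=len(frame))

fs = 16000                      # assumed sample rate (Hz)
n = 1024                        # assumed frame length (samples)
t = np.arange(n) / fs
f0 = 200.0                      # assumed fundamental frequency (Hz)
# Voiced-like frame: equal-amplitude harmonics of f0, Hamming-windowed
frame = sum(np.cos(2 * np.pi * k * f0 * t) for k in range(1, 30)) * np.hamming(n)

ceps = real_cepstrum(frame)
quefrency = np.arange(n) / fs   # quefrency of each cepstral coefficient, in seconds
# Look for the pitch peak between 2 ms and 20 ms of quefrency
lo, hi = int(0.002 * fs), int(0.020 * fs)
peak = lo + np.argmax(ceps[lo:hi])
print(quefrency[peak])          # expected near 1 / f0 = 0.005 s
```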

We then keep the first 12 values of the cepstrum as our feature vector; this is the final result of the feature engineering.

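This is how MFCCs solve the correlation problem discussed earlier: the cepstral transform (a discrete cosine transform of the log mel filterbank energies) largely decorrelates the features.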
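A small sketch of this decorrelation effect on stand-in data. Neighbouring "filterbank channels" are generated with AR(1) correlation across channels (an assumption, not real speech), and each vector is passed through an orthonormal DCT-II, the transform used in MFCC extraction. The average absolute correlation between dimensions should drop sharply.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix: row k is the k-th cosine basis vector."""
    k = np.arange(n)[:, None]
    m = np.arange(n)[None, :]
    mat = np.sqrt(2.0 / n) * np.cos(np.pi * k * (2 * m + 1) / (2 * n))
    mat[0] /= np.sqrt(2.0)  # row 0 is scaled to sqrt(1/n)
    return mat

def mean_abs_offdiag_corr(X):
    """Average |correlation| between different feature dimensions (columns of X)."""
    c = np.corrcoef(X, rowvar=False)
    return np.abs(c[~np.eye(c.shape[0], dtype=bool)]).mean()

# Synthetic stand-in for log filterbank vectors (assumption): AR(1) across channels
rng = np.random.default_rng(0)
n_samples, n_chan, rho = 5000, 24, 0.9
X = np.empty((n_samples, n_chan))
X[:, 0] = rng.normal(size=n_samples)
for j in range(1, n_chan):
    X[:, j] = rho * X[:, j - 1] + np.sqrt(1 - rho**2) * rng.normal(size=n_samples)

C = X @ dct_matrix(n_chan).T  # DCT of every row of X

before = mean_abs_offdiag_corr(X)
after = mean_abs_offdiag_corr(C)
print(f"{before:.2f} -> {after:.2f}")  # the DCT outputs are far less correlated
```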

MFCCs

Overview of steps required to derive MFCCs, moving towards modelling MFCCs with Gaussians and Hidden Markov Models
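The steps can be sketched for a single frame as follows. This is a simplified illustration, not a reference implementation: the mel formula 2595 * log10(1 + f/700), 26 triangular filters, a Hamming window and a 25 ms frame at 16 kHz are common choices assumed here, and pre-emphasis, liftering, energy and delta features are left out.

```python
import numpy as np

def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(n_filters, n_fft, fs):
    """Triangular filters evenly spaced on the mel scale, shape (n_filters, n_fft // 2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(fs / 2), n_filters + 2)
    bins = np.floor(n_fft * mel_to_hz(mel_points) / fs).astype(int)
    fb = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(1, n_filters + 1):
        left, centre, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, centre):            # rising edge of triangle i
            fb[i - 1, k] = (k - left) / (centre - left)
        for k in range(centre, right):           # falling edge of triangle i
            fb[i - 1, k] = (right - k) / (right - centre)
    return fb

def mfcc_frame(frame, fs, n_filters=26, num_ceps=12):
    """Hamming window, power spectrum, mel filterbank, log, DCT, keep first num_ceps."""
    n_fft = len(frame)
    power = np.abs(np.fft.rfft(frame * np.hamming(n_fft))) ** 2 / n_fft
    energies = mel_filterbank(n_filters, n_fft, fs) @ power
    log_energies = np.log(energies + 1e-10)      # floor avoids log(0)
    # Orthonormal DCT-II of the log filterbank energies
    k = np.arange(n_filters)[:, None]
    m = np.arange(n_filters)[None, :]
    dct = np.sqrt(2.0 / n_filters) * np.cos(np.pi * k * (2 * m + 1) / (2 * n_filters))
    dct[0] /= np.sqrt(2.0)
    return (dct @ log_energies)[:num_ceps]

fs = 16000                          # assumed sample rate (Hz)
rng = np.random.default_rng(0)
frame = rng.normal(size=400)        # one 25 ms frame of placeholder audio
mfcc = mfcc_frame(frame, fs)
print(mfcc.shape)                   # (12,)
```

The resulting 12-dimensional vectors are what the later modules model with Gaussians and Hidden Markov Models.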

Origin: Module 8 – Speech Recognition – Feature engineering

Translation and editing: YangSier

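:four_leaf_clover:Small talk:four_leaf_clover:

Hello everyone, this is YangSier, currently studying in the UK. My blog focuses on programming, algorithms, robotics, artificial intelligence, mathematics and more. Give me a follow; I keep posting high-quality content.

:cherry_blossom:QQ chat group: 兔叽的魔术工房 (942848525)

:star:Bilibili account: 白拾Official (active in the Knowledge and Animation sections)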