1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

|

import numpy as np

import sklearn.model_selection as ms

import sklearn.svm as svm

import sklearn.metrics as sm

import matplotlib.pyplot as mp

x, y = [], []

with open("../data/multiple2.txt", "r") as f:

for line in f.readlines():

data = [float(substr) for substr in line.split(",")]

x.append(data[:-1])

y.append(data[-1])

x = np.array(x)

y = np.array(y, dtype=int)

params = [

{"kernel": ["linear"],

"C": [1, 10, 100, 1000]

},

{"kernel": ["poly"],

"C": [1],

"degree": [2, 3]

},

{"kernel": ["rbf"],

"C": [1, 10, 100, 1000],

"gamma": [1, 0.1, 0.01, 0.001]

}

]

model = ms.GridSearchCV(svm.SVC(), params, cv=5)

model.fit(x, y)

print("best_score_:", model.best_score_)

print("best_params_:\n", model.best_params_)

l, r, h = x[:, 0].min() - 1, x[:, 0].max() + 1, 0.005

b, t, v = x[:, 1].min() - 1, x[:, 1].max() + 1, 0.005

grid_x = np.meshgrid(np.arange(l, r, h), np.arange(b, t, v))

flat_x = np.c_[grid_x[0].ravel(), grid_x[1].ravel()]

flat_y = model.predict(flat_x)

grid_y = flat_y.reshape(grid_x[0].shape)

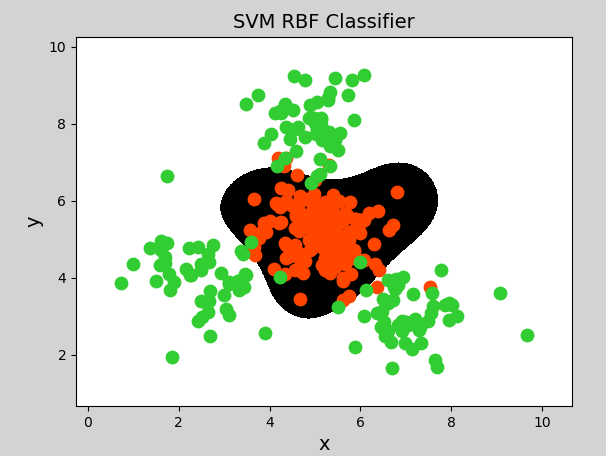

mp.figure("SVM RBF Classifier", facecolor="lightgray")

mp.title("SVM RBF Classifier", fontsize=14)

mp.xlabel("x", fontsize=14)

mp.ylabel("y", fontsize=14)

mp.tick_params(labelsize=10)

mp.pcolormesh(grid_x[0], grid_x[1], grid_y, cmap="gray")

C0, C1 = (y == 0), (y == 1)

mp.scatter(x[C0][:, 0], x[C0][:, 1], c="orangered", s=80)

mp.scatter(x[C1][:, 0], x[C1][:, 1], c="limegreen", s=80)

mp.show()

|