1. Gradient Descent Approach in Robotics

1.1. Demo Case Study: Optimizing Path Planning for an Autonomous Vehicle

- Super Domain: Optimisation in Robotics

- Type of Method: Numerical Optimization Technique

1.2. Problem Definition and Variables

- Objective: To find the optimal path for an autonomous vehicle to navigate from a starting point to a destination while minimizing a cost function.

- Variables:

- $\mathbf{x}$: Vector representing the state of the vehicle (position, velocity, etc.).

- $f(\mathbf{x})$: Cost function to be minimized, e.g., distance, time, or energy consumption.

1.3. Assumptions

- The cost function is differentiable with respect to the state variables.

- The environment is static and can be represented as a known cost landscape.

1.4. Method Specification and Workflow

- Initialization: Start with an initial guess for the state vector $\mathbf{x}$.

- Gradient Calculation: Compute the gradient of the cost function, $\nabla f(\mathbf{x})$, representing the direction of steepest ascent.

- Update Step: Update the state vector in the direction of the negative gradient to move towards the minimum.

- Update rule: $\mathbf{x}{new} = \mathbf{x}{old} - \alpha \nabla f(\mathbf{x}_{old})$, where $\alpha$ is the learning rate.

- Iteration: Repeat the gradient calculation and update steps until convergence criteria are met (e.g., changes in cost function are below a threshold).

1.5. Strengths and Limitations

- Strengths:

- Widely applicable to a variety of optimization problems in robotics.

- Conceptually simple and easy to implement.

- Can be adapted to different environments and cost functions.

- Limitations:

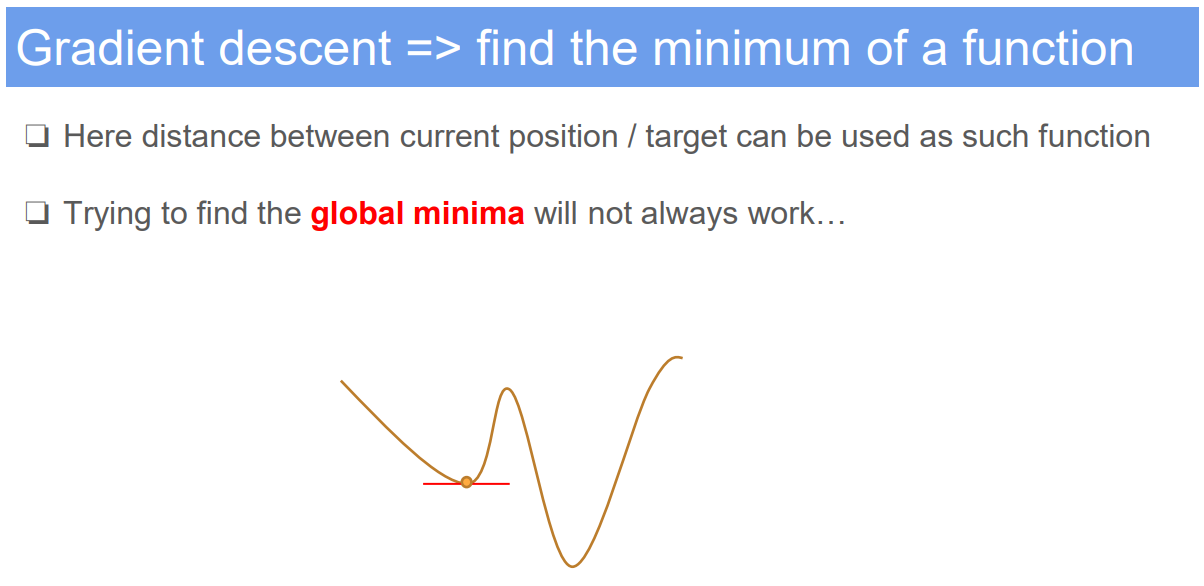

- Convergence to the global minimum is not guaranteed, especially in non-convex landscapes.

- Choice of learning rate $\alpha$ is critical and can affect the speed and success of convergence.

- Computationally intensive for high-dimensional problems.

1.6. Common Problems in Application

- Getting stuck in local minima, missing the global minimum.

- Oscillations or divergence if the learning rate is too high.

- Slow convergence if the learning rate is too low or if the gradient is shallow.

1.7. Improvement Recommendations

- Use adaptive learning rate techniques to adjust $\alpha$ dynamically.

- Implement advanced variants like stochastic gradient descent for large-scale problems.

- Combine with other optimization strategies (e.g., genetic algorithms, simulated annealing) to escape local minima.

2. Assumption: sensor accurate reading

If we remove this assumption we need Kalman Filter.

Reference: The University of Edinburgh, Advanced Robotics, course link

Author: YangSier (discover304.top)

🍀碎碎念🍀

Hello米娜桑,这里是英国留学中的杨丝儿。我的博客的关键词集中在编程、算法、机器人、人工智能、数学等等,持续高质量输出中。

🌸唠嗑QQ群:兔叽の魔术工房 (942848525)

⭐B站账号:白拾Official(活跃于知识区和动画区)

Cover image credit to AI generator.